Data Center Infrastructure Management (DCIM) solutions monitor, evaluate, and manage the utilization and energy consumption of IT equipment such as servers, storage, and network switches, as well as facility infrastructure components like power distribution units and cooling systems. These solutions, which also involve analytics and metrics on the performance and function of the data center components, are growing in popularity. Per a report from Sandler Research, the global DCIM market is expected to grow at a compound annual growth rate of 14.68 percent from 2016 to 2020.

However, DCIM solutions are not the only software systems key to efficiently operating data centers, so facility managers have been increasingly demanding integration from manufacturers.

TYING DATA TOGETHER

“We’re seeing everything become more integrated,” said Saar Yoskovitz, co-founder and CEO, Augury. “The companies that manage or operate these data centers expect that these monitoring systems tie into the DCIM, so they have one dashboard or one controller that gets all the information and provides them with the right insights to make the right decision, to take the right action.”


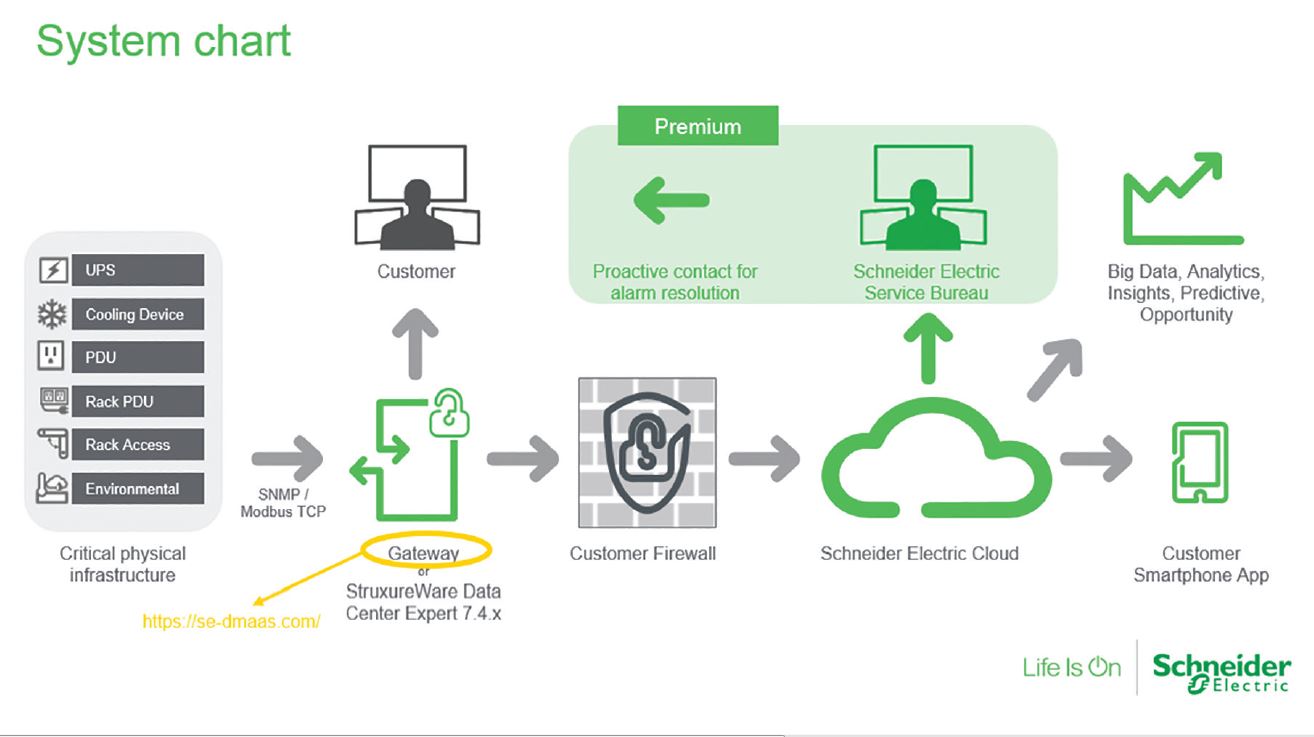

PROACTIVE INSIGHTS: EcoStruxure IT delivers a cloud-based architecture purpose-built for hybrid IT and data center environments. The vendor-agnostic architecture delivers a new standard for proactive insights on critical assets that impact the health and availability of an IT environment with the ability to deliver actionable real-time recommendations to optimize infrastructure performance and mitigate risk.

Advancements in technology have allowed sensors to be installed on all the machines within a data center — something that was unheard of just five years ago, Yoskovitz noted.

“To this day, in some of these facilities, they do mostly preventive maintenance,” he explained. “They have people who walk around the facility, listen to noises, do visual inspections. But that doesn’t really cut it for the more cutting-edge data centers that host critical data. The fact is that we are now able to deploy predictive maintenance at a much wider scale, and they want these sensors to integrate into their main management system.”

According to Yoskovitz, there are two things that should be monitored in data centers: the mechanical health of the assets and the operational conditions. Monitoring the health of the assets ensures facility managers know as soon as possible when something is malfunctioning, so it can be fixed immediately, keeping the cost of the repair down. Monitoring operational conditions, meanwhile, supports optimization that extends the life of the mechanical equipment and increases efficiency.

“Some DCIMs are off the shelf, and some are heavily customized or built by the company that owns the data center,” he said. “They tweak the operating conditions of the infrastructure in order to adhere to the needs of the servers, according to the temperature outside and the load of the computers themselves.

“In your facility, you can’t afford to have a person to look at all the data,” he continued. “You need a computer to crunch all the data to find trends and give you insights into when you need to intervene.”
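As a rough illustration of what "crunching the data to find trends" can look like, the sketch below fits a straight line to a short series of sensor readings and flags the asset when the upward slope exceeds a limit. It is a minimal example, not any vendor's method: the function names, the vibration values, and the slope limit are all hypothetical.

```python
from statistics import mean


def trend_slope(readings):
    """Least-squares slope of the readings against their sample index."""
    n = len(readings)
    if n < 2:
        raise ValueError("need at least two readings to estimate a trend")
    x_bar = (n - 1) / 2          # mean of the indices 0..n-1
    y_bar = mean(readings)
    numerator = sum((i - x_bar) * (y - y_bar) for i, y in enumerate(readings))
    denominator = sum((i - x_bar) ** 2 for i in range(n))
    return numerator / denominator


def needs_intervention(readings, max_slope):
    """Flag the asset when readings climb faster than max_slope per sample."""
    return trend_slope(readings) > max_slope


# Hypothetical vibration readings from one pump, one sample per hour.
vibration = [1.0, 1.1, 1.2, 1.3, 1.4]
steady = [2.0, 2.0, 2.0, 2.0]

if needs_intervention(vibration, max_slope=0.05):
    print("Vibration is trending upward; schedule an inspection.")
```

A production system would likely use longer windows and noise-tolerant statistics, but the idea is the same: the software watches the trend so a person only has to look when it changes.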

MAKING SENSE OF BIG DATA

According to Joe Reele, vice president, Data Center Solution Architects, Schneider Electric, the old saying of “knowledge is power” holds true.

“In today’s very sophisticated and complex infrastructure systems, when you look at the data center as a whole, starting from the utility transformer outside of the building to the generator plant to the distribution within the building to the critical power systems — UPS and static switches, PDUs — all the way down the plug strip within the rack, that is an awful lot of data to understand. Having data or having monitoring is one thing, but being able to make sense of that data is really more important than having the data.”

However, monitoring and sensor data in the data center is almost a must-have, Reele noted, because the cost of downtime is significant.

“Risk management is also a big part of all this monitoring, along with capacity management,” he said. “The sensor data and the monitoring systems today are helping businesses make decisions more than ever before — when do I add, when do I contract, when do I consolidate? All of this data is very important to run a very efficient, cost-effective data center — and also, to have a risk profile equal to your risk appetite.”

Reele said he is increasingly seeing the convergence of IT technology and physical infrastructure technology.

“Typically, they’ve been disparate,” he said. “They are now becoming integrated and converged. What I mean by that is, we’re now able to say, ‘We’re seeing a 2°F rise in chiller water temperature because computing utilization just went from 20 to 80 percent.’ In years past, you could never really make that correlation. Today, we’re really looking at the data center as one entity versus a bunch of separate ones. That’s a very significant trend.

“It’s kind of like having two arms on the body, but you have two individual heads controlling each arm, and you’re trying to play baseball,” Reele continued. “We have come to the realization that we can’t play baseball with one body and two heads. Our body has got to be in unison with our one head; otherwise, we’re not going to be very coordinated, and we’re going to look foolish. So we’ve now realized we cannot run a data center with two heads. We have to run a data center with one body that’s got two arms: a facility arm and IT arm, but we’re going to run it with one head.”
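The kind of IT-to-facility correlation Reele describes can be sketched in a few lines once both data streams land in the same place. The example below computes a Pearson correlation coefficient between compute utilization and chilled water temperature. The sample values are invented to mirror the 20-to-80 percent and 2°F figures in the quote, and the function and variable names are assumptions for illustration only.

```python
from math import sqrt


def pearson(xs, ys):
    """Pearson correlation coefficient between two equal-length series."""
    if len(xs) != len(ys) or len(xs) < 2:
        raise ValueError("series must have the same length (at least 2)")
    mean_x = sum(xs) / len(xs)
    mean_y = sum(ys) / len(ys)
    covariance = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    spread_x = sqrt(sum((x - mean_x) ** 2 for x in xs))
    spread_y = sqrt(sum((y - mean_y) ** 2 for y in ys))
    if spread_x == 0 or spread_y == 0:
        raise ValueError("correlation is undefined for a constant series")
    return covariance / (spread_x * spread_y)


# Hypothetical paired samples taken at the same timestamps.
utilization_pct = [20, 35, 50, 65, 80]            # from the IT side
chilled_water_f = [44.0, 44.5, 45.0, 45.5, 46.0]  # from the facility side

r = pearson(utilization_pct, chilled_water_f)
print(f"Correlation between IT load and chilled water temperature: {r:.2f}")
```

A coefficient near 1 suggests the two move together, which is only visible when IT and facility data are no longer kept in separate systems. Real readings would be noisier and would need aligned timestamps before a comparison like this is meaningful.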

One challenge facing data centers is that, while the ability to monitor 100,000 different things has been around for quite some time, making 15 to 20 different systems work together has been somewhat of an Achilles’ heel, not only for data centers but for any large facility, Reele explained.

“Having seamless integration has always been a big issue,” he said. “Having open systems on a standard protocol that snap together and assimilate information is critical.”

SMARTER, MORE EFFICIENT OPERATION

Data centers have always been very complex, critical environments where uptime is an absolute requirement, noted Jay Hendrix, data center portfolio manager, Siemens Industry Inc.

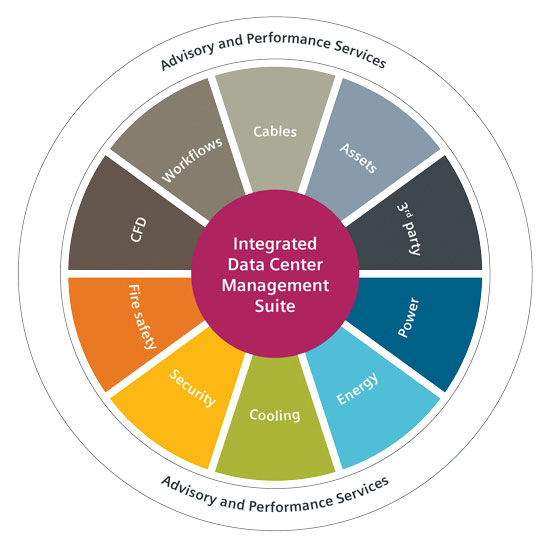

FULL TRANSPARENCY: Siemens Building Technologies’ Integrated Data Center Management Suite (IDCMS) offers full transparency into entire facility infrastructures, enabling a solid decision-making basis to provide operational and efficiency improvements. It integrates the functionalities of a BMS, energy power management systems (EPMS), and data center infrastructure management (DCIM), which enables users to optimize processes and reduces human errors and complexity.

“That complexity will only increase with the growth of digitalization and demand for more actionable data. With so many interconnected systems with many moving parts within a data center, it’s really too much to ask any data center facility manager to physically monitor and manage all of these systems and components manually,” Hendrix said. “For monitoring, the software tells you the story. You need the sensor inputs to provide the data from all the different systems to tell you about the health and the performance of all the equipment that supports the white space equipment (the critical area of a data center) and infrastructure systems, such as power, cooling, automation, fire detection, and physical security. It’s kind of like flying a plane: You can fly by sight as long as it’s clear outside. But what happens when you fly into a cloud? You need the instrumentation to tell you what’s happening.”

According to Hendrix, data centers are not going to get all the functions they need from a single software system.

“It really takes an integrated data center management approach to bundle all necessary systems such as automation, DCIM, and more together,” he said. “There are a lot of market developments going on right now with artificial intelligence and analytics, and that’s really where the future is headed.

“Additionally, we’re starting to see more demands for cloud applications,” Hendrix continued. “Data center owners and customers have been a little reluctant to use cloud applications, but they’re opening up to the idea. Being able to leverage cloud resources and software solutions for dashboards and analytics is going to become more prevalent going forward.”

One of the things that hasn’t changed in data centers is that uptime is still very important, Hendrix noted, citing a study administered by the Uptime Institute, which confirmed around a third of all reported outages cost more than $250,000, with many exceeding $1 million.

“Uptime remains extremely important, but there’s also more of an emphasis on efficiency — having your data center work and operate more efficiently and using your resources wisely,” he said. “So, monitoring and collecting data and managing that data in order to make it more efficient and improve uptime and people utilization is important. Data centers are becoming more autonomous environments, which is driving the need for monitoring and more sensors within the data center.

“It’s about becoming smarter, more efficient, and utilizing resources more appropriately,” Hendrix concluded.

Publication date: 8/13/2018
